2024-03-01 14:44:21

2024-04-01 17:10:03

#Datenhörnchen (data squirrels):

Why the fluffy heroes of the internet have the full picture

In the digital jungle of the internet there is a species that is often overlooked: the data squirrels. These fluffy creatures have settled in the depths of cyberspace and have become true heroes when it comes to data protection and security. But why exactly are they so import…

2024-03-01 13:55:47

Filing: Elon Musk says OpenAI is "not just developing but is actually refining an AGI to maximize profits for Microsoft" and claims GPT-4 constitutes an AGI (Manish Singh/TechCrunch)

https://techcrunch.com/2024/03/01/elon-musk-openai-sam-altman-court…

2024-05-01 06:48:59

Do Large Language Models Understand Conversational Implicature -- A case study with a Chinese sitcom

Shisen Yue, Siyuan Song, Xinyuan Cheng, Hai Hu

https://arxiv.org/abs/2404.19509 https://arxiv.org/pdf/2404.19509

arXiv:2404.19509v1 Announce Type: new

Abstract: Understanding the non-literal meaning of an utterance is critical for large language models (LLMs) to become human-like social communicators. In this work, we introduce SwordsmanImp, the first Chinese multi-turn-dialogue-based dataset aimed at conversational implicature, sourced from dialogues in the Chinese sitcom *My Own Swordsman*. It includes 200 carefully handcrafted questions, each annotated with the Gricean maxims that have been violated. We test eight closed-source and open-source LLMs on two tasks: a multiple-choice question task and an implicature explanation task. Our results show that GPT-4 attains human-level accuracy (94%) on multiple-choice questions. CausalLM follows GPT-4 with 78.5% accuracy. Other models, including GPT-3.5 and several open-source models, achieve lower accuracy on multiple-choice questions, ranging from 20% to 60%. Human raters were asked to rate the LLM-generated explanations of the implicatures for reasonability, logic and fluency. While all models generate largely fluent and self-consistent text, their explanations score low on reasonability except for GPT-4, suggesting that most LLMs cannot produce satisfactory explanations of the implicatures in the conversation. Moreover, we find that LLMs' performance does not vary significantly by Gricean maxim, suggesting that LLMs do not seem to process implicatures derived from different maxims differently. Our data and code are available at https://github.com/sjtu-compling/llm-pragmatics.
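The per-maxim comparison above boils down to grouping multiple-choice accuracy by the annotated maxim. A minimal sketch, assuming a hypothetical record format: the `Item` fields, the lowercase maxim labels and the toy answers are invented for illustration and are not taken from SwordsmanImp or its code.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Item:
    # Hypothetical record for one multiple-choice question.
    maxims: tuple[str, ...]  # Gricean maxim(s) annotated as violated
    gold: str                # correct option label
    predicted: str           # option chosen by the model


def accuracy_by_maxim(items):
    """Return (overall accuracy, {maxim: accuracy}).

    An item annotated with several maxims counts toward each of them.
    """
    if not items:
        return 0.0, {}
    hits, totals = defaultdict(int), defaultdict(int)
    for it in items:
        ok = it.gold == it.predicted
        for m in it.maxims:
            totals[m] += 1
            hits[m] += ok  # True adds 1, False adds 0
    overall = sum(it.gold == it.predicted for it in items) / len(items)
    return overall, {m: hits[m] / totals[m] for m in totals}


# Toy answers, invented for illustration only.
toy = [
    Item(("quantity",), "A", "A"),
    Item(("relation",), "B", "C"),
    Item(("quantity", "manner"), "D", "D"),
    Item(("relation",), "A", "A"),
]
overall, per = accuracy_by_maxim(toy)
print(overall, per)
```

The sketch only produces the raw breakdown; a claim like "does not vary significantly by maxim" would additionally need a significance test over the real per-maxim counts.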

2024-05-01 13:10:16

Shawna pushed at tangled cables in the bedroom nook doubling as a home office, clearing a path behind her cuboid IKEA wardrobe. She brushed aside plain blue Zoom-call tops, a layer of Target knitwear, pushed firmly through flimsy '00s clubwear she'd never fit again. Back to the real world: "captchas", "Chat GPT", "President Trump", a fading bad dream. Logical, she thought: ornate Edwardian wardrobes lead to Narnia, misassembled IKEA wardrobes lead to c…

2024-04-30 09:46:08

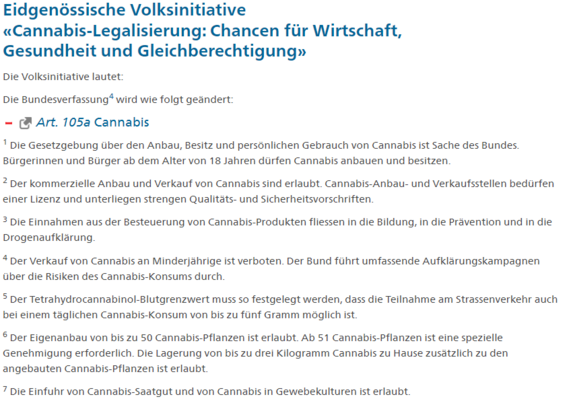

Just found in the Bundesblatt (Swiss Federal Gazette):

Once again there is an attempt to legalize #Cannabis in Switzerland

Initiative text:

https://www.fedlex.admin.ch/eli/fga/2024/941/de

2024-02-28 22:54:33

2024-05-01 07:17:29

A Framework for Leveraging Human Computation Gaming to Enhance Knowledge Graphs for Accuracy Critical Generative AI Applications

Steph Buongiorno, Corey Clark

https://arxiv.org/abs/2404.19729 https://arxiv.org/pdf/2404.19729

arXiv:2404.19729v1 Announce Type: new

Abstract: External knowledge graphs (KGs) can be used to augment large language models (LLMs), while simultaneously providing an explainable knowledge base of facts that can be inspected by a human. This approach may be particularly valuable in domains where explainability is critical, like human trafficking data analysis. However, creating KGs can pose challenges. KGs parsed from documents may comprise explicit connections (those directly stated by a document) but miss implicit connections (those obvious to a human although not directly stated). To address these challenges, this preliminary research introduces the GAME-KG framework, standing for "Gaming for Augmenting Metadata and Enhancing Knowledge Graphs." GAME-KG is a federated approach to modifying explicit as well as implicit connections in KGs by using crowdsourced feedback collected through video games. GAME-KG is shown in two demonstrations: a Unity test scenario from Dark Shadows, a video game that collects feedback on KGs parsed from US Department of Justice (DOJ) Press Releases on human trafficking, and a follow-up experiment where OpenAI's GPT-4 is prompted to answer questions based on modified and unmodified KGs. Initial results suggest that GAME-KG can be an effective framework for enhancing KGs, while simultaneously providing an explainable set of structured facts verified by humans.
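The core idea (explicit edges parsed from documents, plus player feedback that adds implicit connections or prunes wrong ones, then serialized as context for an LLM prompt) can be sketched as a small triple store. Everything here is an assumption for illustration: the `KnowledgeGraph` class, the yes/no vote format, the `min_votes`/`threshold` aggregation rule and the placeholder entities are hypothetical and not GAME-KG's actual implementation.

```python
from dataclasses import dataclass, field

Triple = tuple[str, str, str]  # (subject, relation, object)


@dataclass
class KnowledgeGraph:
    # Starts with edges parsed directly from documents ("explicit" connections).
    triples: set[Triple] = field(default_factory=set)

    def apply_feedback(self, votes, min_votes=3, threshold=0.7):
        """Add or remove edges based on crowdsourced yes/no votes.

        Hypothetical rule: `votes` maps a candidate triple to a list of
        booleans (True = the player says the connection holds). With at least
        `min_votes` ballots, add the triple if the approval share is
        >= threshold, remove it if the share is <= 1 - threshold, and
        otherwise leave the graph unchanged.
        """
        for triple, ballots in votes.items():
            if len(ballots) < min_votes:
                continue  # too little feedback to act on
            share = sum(ballots) / len(ballots)
            if share >= threshold:
                self.triples.add(triple)
            elif share <= 1 - threshold:
                self.triples.discard(triple)

    def as_prompt_context(self):
        # One fact per line, sorted so the prompt text is deterministic.
        return "\n".join(f"{s} {r} {o}." for s, r, o in sorted(self.triples))


# Placeholder entities, invented for illustration only.
kg = KnowledgeGraph({("Org_B", "employs", "Person_A"),
                     ("Person_A", "lives_in", "City_X")})
votes = {
    ("Person_A", "works_in", "City_X"): [True, True, True, False],  # implicit, approved
    ("Person_A", "lives_in", "City_X"): [False, False, False],      # explicit, rejected
    ("Org_B", "located_in", "City_X"): [True, False],               # too few votes
}
kg.apply_feedback(votes)
print(kg.as_prompt_context())
```

Comparing answers from prompts built on the graph before and after `apply_feedback` mirrors, in spirit, the modified-versus-unmodified KG experiment described above.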

2024-05-01 06:49:12

Better & Faster Large Language Models via Multi-token Prediction

Fabian Gloeckle, Badr Youbi Idrissi, Baptiste Rozière, David Lopez-Paz, Gabriel Synnaeve

https://arxiv.org/abs/2404.19737 https://arxiv.org/pdf/2404.19737

arXiv:2404.19737v1 Announce Type: new

Abstract: Large language models such as GPT and Llama are trained with a next-token prediction loss. In this work, we suggest that training language models to predict multiple future tokens at once results in higher sample efficiency. More specifically, at each position in the training corpus, we ask the model to predict the following n tokens using n independent output heads, operating on top of a shared model trunk. Considering multi-token prediction as an auxiliary training task, we measure improved downstream capabilities with no overhead in training time for both code and natural language models. The method is increasingly useful for larger model sizes, and keeps its appeal when training for multiple epochs. Gains are especially pronounced on generative benchmarks like coding, where our models consistently outperform strong baselines by several percentage points. Our 13B-parameter models solve 12% more problems on HumanEval and 17% more on MBPP than comparable next-token models. Experiments on small algorithmic tasks demonstrate that multi-token prediction is favorable for the development of induction heads and algorithmic reasoning capabilities. As an additional benefit, models trained with 4-token prediction are up to 3 times faster at inference, even with large batch sizes.
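A minimal NumPy sketch of the training objective described above, assuming the shared trunk's per-position outputs are already computed (`hidden`) and, as a simplification, each of the n heads is a single linear map to vocabulary logits. Head k (0-based) at position t is scored against token t + k + 1. Averaging over all valid (position, head) pairs is a choice made here for readability, not necessarily the paper's exact normalization; all names are hypothetical.

```python
import numpy as np


def softmax_xent(logits, target):
    """Cross-entropy of one target id under softmax(logits), computed stably."""
    z = logits - logits.max()
    log_probs = z - np.log(np.exp(z).sum())
    return -log_probs[target]


def multi_token_loss(hidden, heads, tokens):
    """Average multi-token prediction loss.

    hidden: (T, d) outputs of the shared trunk, one row per position.
    heads:  list of n (d, V) matrices; head k predicts the token k + 1 steps ahead.
    tokens: (T,) integer token ids of the training sequence.
    """
    T = len(tokens)
    if T < 2:
        raise ValueError("need at least two tokens")
    total, count = 0.0, 0
    for t in range(T):
        for k, W in enumerate(heads):
            j = t + k + 1  # target position for head k
            if j >= T:     # later heads run off the end of the sequence first
                break
            total += softmax_xent(hidden[t] @ W, tokens[j])
            count += 1
    return total / count


# Toy usage with random weights (no trained model involved).
rng = np.random.default_rng(0)
d, V, T, n = 8, 16, 10, 4
hidden = rng.normal(size=(T, d))
heads = [0.1 * rng.normal(size=(d, V)) for _ in range(n)]
tokens = rng.integers(0, V, size=T)
loss = multi_token_loss(hidden, heads, tokens)
print(f"{n}-token prediction loss: {loss:.3f}")
```

With a single head (n = 1) this reduces to the ordinary next-token loss; the extra heads only add targets further ahead while reusing the same trunk output.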